Recently, I needed to check and compare Azure consumption for a client. What a repetitive task: download the csv files from the Azure billing portal, open it in Excel to clean/merge/customize it… Would it be great if it could be easily done? Well, it is! Power BI is the perfect tool to do that (and a lot more). In this post, I will explain how I created my Power Query and solved different problem I encountered in my journey.

The Goal

I want PowerBI to read (dynamically) all csv files in a folder and updates all my charts and graph, so I can share them easily why my clients.

To create Power Queries, you can use the new

Power BI Desktop available online for free or Excel. With Excel 2016, the Power query editor tools is included, for the previous version you need to install the

Microsoft Power Query for Excel add-in. In both cases, many tutorials explain how to get started with these tools (see the references at the end of this post).

The Problem

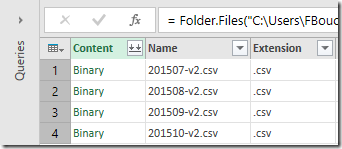

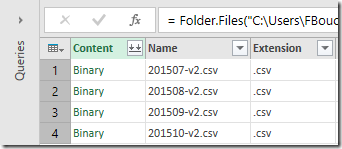

Creating our query should be pretty straight forward, since we can create a Power Query by selecting a folder as a source.

The problem is that our file contains three types of records: Provisioning Status, Statement, and Daily Usage. These “tables” are very different and don’t have the same number of columns. This is why when we try to merge them; we got some Error.

The Solution

The way to solve this problem is to create a function that will parse one file to extract one recordset, and call that function for all the file in the folder.

Note:

The simplest way to get started is to work with one file, then convert it to a function. The way to that is to replace the path of the csv file by a variable that will be passed as a parameter: (filePath) =>.

To keep the code as simple as possible, I kept the function and the looping query separated, but they can be merged in only query.

Here are the queries to extract the Daily Usage (third recordSet) from the csv file and some code description.

// -- fcnCleanOneCSV_v2 ----------------------------------------

(filePath) =>

let

fnRawFileContents = (fullpath as text) as table =>

let

Value = Table.FromList(Lines.FromBinary(File.Contents(fullpath)),Splitter.SplitByNothing())

in Value,

Source = fnRawFileContents(filePath),

#"Daily Usage Row" = Table.SelectRows(Source, each Text.Contains([Column1], "Daily Usage")),

#"DailyPosition" = Table.PositionOf(Source, #"Daily Usage Row" {0}),

#"TopRemoved" = Table.Skip(Source, (DailyPosition + 1)),

#"Result" = Table.PromoteHeaders(TopRemoved)

in

Result

The first part is to load the content of the file as a one column table. Then

DailyPosition is used to store the position where Daily Usage data starts. This value is used in

Table.Skip(Source, (DailyPosition + 1)) to keep only the rows after, since Daily usage is the last recordSet it works perfectly.

//== Process Folder CSV_v2 for Daily Usage==============================

let

Source = Folder.Files("C:\Azure_Consumption_demo\CSV_v2\"),

MergedColumns = Table.CombineColumns(Source,{"Folder Path", "Name"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"Merged"),

RemovedOtherColumns = Table.SelectColumns(MergedColumns,{"Merged"}),

#"Results" = Table.AddColumn(RemovedOtherColumns , "GetCsvs", each fcnCleanOneCSV_v2([Merged])),

#"Removed Columns" = Table.RemoveColumns(Results,{"Merged"}),

#"Expanded GetCsvs" = Table.ExpandTableColumn(#"Removed Columns", "GetCsvs", {"Usage Date,Meter Category,Meter Id,Meter Sub-category,Meter Name,Meter Region,Unit,Consumed Quantity,Resource Location,Consumed Service,Resource Group,Instance Id,Tags,Additional Info,Service Info 1,Service Info 2"}, {"Usage Date,Meter Category,Meter Id,Meter Sub-category,Meter Name,Meter Region,Unit,Consumed Quantity,Resource Location,Consumed Service,Resource Group,Instance Id,Tags,Additional Info,Service Info 1,Service Info 2"}),

#"Demoted Headers" = Table.DemoteHeaders(#"Expanded GetCsvs"),

#"Split Column by Delimiter" = Table.SplitColumn(#"Demoted Headers","Column1",Splitter.SplitTextByDelimiter(","),{"Column1.1", "Column1.2", "Column1.3", "Column1.4", "Column1.5", "Column1.6", "Column1.7", "Column1.8", "Column1.9", "Column1.10", "Column1.11", "Column1.12", "Column1.13", "Column1.14", "Column1.15", "Column1.16"}),

#"Changed Type" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"Column1.1", type text}, {"Column1.2", type text}, {"Column1.3", type text}, {"Column1.4", type text}, {"Column1.5", type text}, {"Column1.6", type text}, {"Column1.7", type text}, {"Column1.8", type text}, {"Column1.9", type text}, {"Column1.10", type text}, {"Column1.11", type text}, {"Column1.12", type text}, {"Column1.13", type text}, {"Column1.14", type text}, {"Column1.15", type text}, {"Column1.16", type text}}),

#"Promoted Headers" = Table.PromoteHeaders(#"Changed Type"),

#"Changed Type1" = Table.TransformColumnTypes(#"Promoted Headers",{{"Usage Date", type date}, {"Meter Region", type text}}),

#"Replaced Value" = Table.ReplaceValue(#"Changed Type1","""","",Replacer.ReplaceText,{"Meter Category", "Meter Id", "Meter Sub-category", "Meter Name", "Meter Region", "Unit", "Resource Location", "Consumed Service", "Instance Id", "Tags", "Additional Info", "Service Info 1", "Service Info 2"}),

#"Changed Type2" = Table.TransformColumnTypes(#"Replaced Value",{{"Consumed Quantity", type number}})

in

#"Changed Type2"

From row 1 to 6, we get all the file in the folder then combine columns to get a full path for each file. We then pass that to our function previously defined. With the command

Table.SplitColumn, on line 11, we re-built the result as a table with multiple columns.

The rest of the query is to clean-up the result by changing the column’s type or removing undesired character.

To get the Statement recordSet, it’s the same thing except that we will

Table.Range, since the rows that we are looking for are between Provisioning Status and Daily Usage.

//== fcnGetStatement ==========================================

(filePath) =>

let

fnRawFileContents = (fullpath as text) as table =>

let

Value = Table.FromList(Lines.FromBinary(File.Contents(fullpath)),Splitter.SplitByNothing())

in Value,

Source = fnRawFileContents(filePath),

#"Daily Usage Row" = Table.SelectRows(Source, each Text.Contains([Column1], "Daily Usage")),

#"DailyPosition" = Table.PositionOf(Source, #"Daily Usage Row" {0}),

#"Statement Row" = Table.SelectRows(Source, each Text.Contains([Column1], "Statement")),

#"StatementPosition" = Table.PositionOf(Source, #"Statement Row" {0}),

#"SelectedRows" = Table.Range(Source,(StatementPosition+1),(DailyPosition - StatementPosition )-2),

#"Result" = Table.PromoteHeaders(SelectedRows)

in

Result

And once again we loop through every file and do some clean-up.

//== Query Statements ========================================

let

Source = Folder.Files("C:\Azure_Consumption_demo\CSV_v2\"),

MergedColumns = Table.CombineColumns(Source,{"Folder Path", "Name"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"Merged"),

RemovedOtherColumns = Table.SelectColumns(MergedColumns,{"Merged"}),

#"Results" = Table.AddColumn(RemovedOtherColumns , "GetCsvs", each fcnGetStatement([Merged])),

#"Removed Columns" = Table.RemoveColumns(Results,{"Merged"}),

#"Expanded GetCsvs" = Table.ExpandTableColumn(#"Removed Columns", "GetCsvs", {"Billing Period,Meter Category,Meter Sub-category,Meter Name,Meter Region,SKU,Unit,Consumed Quantity,Included Quantity,Within Commitment,Overage Quantity,Currency,Overage,Commitment Rate,Rate,Value"}, {"Billing Period,Meter Category,Meter Sub-category,Meter Name,Meter Region,SKU,Unit,Consumed Quantity,Included Quantity,Within Commitment,Overage Quantity,Currency,Overage,Commitment Rate,Rate,Value"}),

#"Demoted Headers" = Table.DemoteHeaders(#"Expanded GetCsvs"),

#"Split Column by Delimiter" = Table.SplitColumn(#"Demoted Headers","Column1",Splitter.SplitTextByDelimiter(",", QuoteStyle.Csv),{"Column1.1", "Column1.2", "Column1.3", "Column1.4", "Column1.5", "Column1.6", "Column1.7", "Column1.8", "Column1.9", "Column1.10", "Column1.11", "Column1.12", "Column1.13", "Column1.14", "Column1.15", "Column1.16"}),

#"Promoted Headers" = Table.PromoteHeaders(#"Split Column by Delimiter"),

#"Replaced Value" = Table.ReplaceValue(#"Promoted Headers","""","",Replacer.ReplaceText,{"Meter Category", "Meter Sub-category", "Meter Name", "Meter Region", "SKU", "Unit"})

in

#"Replaced Value"

Once all that is done… Now the fun can begin!

References

Slaying the Dragon: A Secret History of Dungeons & Dragons

Slaying the Dragon: A Secret History of Dungeons & Dragons  Can't Hurt Me: Master Your Mind and Defy the Odds

Can't Hurt Me: Master Your Mind and Defy the Odds