In this post, I will share with you how I optimized a three-hour process into a two-minute, automated, customizable solution using Azure Logic App and API App.

Quick Context

For the past two hundred and fifty-something weeks, every Monday, I have shared my reading notes. These are the articles I read during the previous week, each with a short comment: my two cents about it. I read all those books, articles and posts on my e-book reader, in this case a Kindle Paperwhite. I use an online service called Readability that can easily clean an article (remove everything except the text and useful images) and send it to my e-book reader. All the articles are kept as a bookmark list.

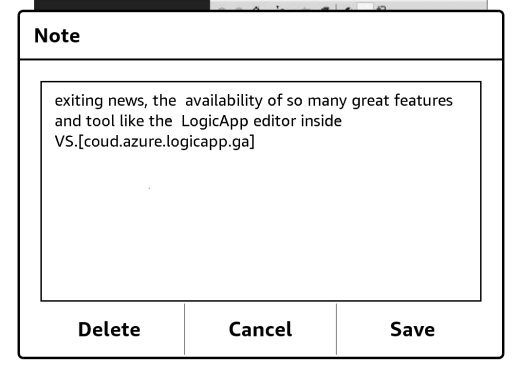

When I have time, I read what's on my list. Each time I'm done, I add a note ending with tags between square brackets; those are used later for searching and filtering.

Every Sunday, I extract the notes, search Readability to find each article's URL again, and build the post for the next morning from all this. A few years ago, I did a first optimization using a Ruby script, and I blogged about it here. While it has worked very well all those years, I wanted to change it so I would not have to install Ruby and the gems on every machine I use.

Optimization Goals

Here is the list of things I wanted for this new brew of the Reading Notes post generator:

- No installation required.

- Not coupled to a device or a service.

- Generate a JSON version for future purposes.

- Cheap.

Optimization Plan

Azure Logic App is the perfect candidate for this optimization because it doesn't require any local installation. Since it is a flow between connectors, any of them can be swapped to suit the user. In fact, that was the primary factor that made me pick Azure Logic App. For example, in the current solution, I'm using OneDrive as the initial drop zone. If in your environment you would prefer using Dropbox instead of OneDrive, you just need to switch connectors, and nothing else will be affected. Another great advantage is that Azure Logic App is part of the App Service ecosystem, so all those components are compatible.

Here is the full process plan, at a high level:

- Drop the My Clippings.txt file in a OneDrive folder.

- Make a copy of the file in Azure Blob Storage, using the built-in Blob Storage connector.

- Parse the My Clippings.txt file to extract all the clippings (notes) since the last extraction, using my custom My Clippings API App.

- For each note:

    - Get the URL where that post is coming from, using my custom Readability API App.

    - Extract the tags and category (the first tag is used as the category), using my custom Azure Function.

    - Serialize the note with all the information in JSON.

    - Save the JSON file in a temporary container in Azure Blob Storage.

- Generate a summary of all JSON files using my last custom API App, the ReadingNotes API App. It also saves JSON and Markdown files of the summary.
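The ReadingNotes API App's code isn't shown in this post, but to give a rough idea of what that last step involves, here is a minimal sketch. It assumes each note has already been enriched with a title, URL, category and comment, groups the notes by category, and writes one Markdown bullet per note. The BuildSummary name, the tuple shape and the Markdown layout are hypothetical, not the actual output format of the ReadingNotes API App.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Hypothetical sketch: builds a Markdown summary from notes that already carry
// a title, URL, category and comment. The tuple shape and the Markdown layout
// are illustrative assumptions, not the ReadingNotes API App's real format.
static string BuildSummary(IEnumerable<(string Title, string Url, string Category, string Comment)> notes)
{
    var sb = new StringBuilder();

    // One section per category, sorted alphabetically.
    foreach (var category in notes.GroupBy(x => x.Category).OrderBy(g => g.Key))
    {
        sb.AppendLine($"## {category.Key}");
        sb.AppendLine();

        // One bullet per note: link to the article, then the comment.
        foreach (var n in category)
        {
            sb.AppendLine($"- [{n.Title}]({n.Url}) - {n.Comment}");
        }

        sb.AppendLine();
    }

    return sb.ToString();
}

var summary = BuildSummary(new[]
{
    ("Logic Apps tips", "https://example.com/a", "Cloud", "Good overview."),
    ("Async in C#", "https://example.com/b", "Programming", "Worth a re-read."),
});

Console.Write(summary);
```

Grouping in the builder, rather than in the Logic App, keeps the workflow itself simple: it only has to hand over the collection of JSON notes.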

Let's see it in more detail

Many great tutorials are already available about how to create an Azure Logic App or an Azure API App, so in this post I won't explain how to create them; I'll mostly share some interesting snippets and gotchas I encountered during my journey.

In the next screen capture, you can see all the steps contained in my two Logic Apps. I decided to split the process because many tasks needed to be done for every note. The main Logic App (on the left) fetches the notes collection and generates the output. For every note, it calls the child Logic App (on the right).

The ReadingNotes Builder contains:

- Initial trigger when a file is created in a OneDrive folder.

- Create a copy of the file in Azure Blob Storage.

- Delete the file in OneDrive.

- Get the configuration file from Azure Blob Storage.

- Call the API App responsible for parsing the file and extracting the recent clippings.

- Call the child Logic App for every clipping returned in the previous step (foreach loop):

    - A. Trigger of the child Logic App.

    - B. Call the API App responsible for searching the online bookmark collection and returning the URL of the article/post.

    - C. Call the Azure Function App responsible for extracting tags from the note.

    - D. Call the Azure Function App responsible for converting the object to JSON.

    - E. Save the JSON object in a file in Azure Blob Storage.

    - F. Get the updated configuration file.

    - G. Call the Azure Function App responsible for keeping the latest note date.

    - H. Update the configuration with the latest date.

    - I. Return an HTTP 200 code, so the parent Logic App knows the work is done.

- Call the API App responsible for building the final Markdown file.

- Save the Markdown file returned at the previous step to Azure Blob Storage.

- Finally, update the configuration.
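Steps G and H boil down to a small comparison: keep whichever date is later. Here is a minimal sketch of that logic, assuming the dates travel as ISO 8601 strings; the KeepLatestDate name and the date format are hypothetical, since the configuration file's format isn't shown here.

```csharp
using System;
using System.Globalization;

// Hypothetical sketch of steps G and H: keep whichever is later, the date stored
// in the configuration file or the date of the note that was just processed, so
// the next run only picks up newer clippings. Exchanging dates as ISO 8601
// strings is an assumption; the configuration format isn't shown in this post.
static string KeepLatestDate(string configDate, string noteDate)
{
    var current = DateTime.Parse(configDate, CultureInfo.InvariantCulture);
    var candidate = DateTime.Parse(noteDate, CultureInfo.InvariantCulture);
    var latest = candidate > current ? candidate : current;

    // "s" is the sortable ISO 8601 pattern, e.g. 2016-03-10T08:00:00.
    return latest.ToString("s", CultureInfo.InvariantCulture);
}

Console.WriteLine(KeepLatestDate("2016-03-06T22:15:00", "2016-03-10T08:00:00"));
```

Keep in mind that the iterations of a Logic App foreach loop can run in parallel, so several child runs may read and write the same configuration file at the same time. A read-compare-write like this one is only reliable if those updates are serialized, for example by making the loop run sequentially.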

As an initial trigger, I decided to use OneDrive because it's available from any device, anywhere. I just need to drop the My Clippings.txt file in the folder, and the Logic App starts. Here is how I configured it in the editor:

MyClippings API App

Kindle's notes and highlights are saved in a local file named My Clippings.txt. To be able to manipulate my notes, I needed a parser. Ed Ryan created the excellent KindleClippings, an open-source project available on GitHub. In this project, I only wrapped that .NET parser in an Azure API App. Wrapping it in an API App helps me later to manage security and metrics, and it simplifies connecting it to other Azure solutions.

I won't go into the details of how to create an Azure API App; a lot of great documentation is available online. However, you will need at least the Azure .NET SDK 2.8.1 to create an API App project.

Swagger API metadata and UI are a must, so don't forget to uncomment that section in the SwaggerConfig file.

I needed an array of the notes taken after a specific date. All the heavy work is done in the KindleClippings library. The ArrayKindleClippingsAfter method gets the content of the My Clippings.txt file (previously saved in Azure Blob Storage) and passes it to the MyClippingsParser.Parse method. Then, using LINQ, it returns only the notes taken after the last ReadingNotes publication date. All the code for this My Clippings API App is available on GitHub, but here is the method:

```csharp
// Returns the notes added on or after StartDate from a My Clippings.txt file
// previously saved in Azure Blob Storage.
public Clipping[] ArrayKindleClippingsAfter(string containername, string filename, string StartDate)
{
    var blobStream = StorageHelper.GetStreamFromStorage(containername, filename);
    var clippings = KindleClippings.MyClippingsParser.Parse(blobStream);

    // Parse the start date once, then keep only the clippings of type Note
    // added since the last publication.
    var startDate = DateTime.Parse(StartDate);
    var result = (from c in clippings
                  where c.DateAdded >= startDate
                     && c.ClippingType == ClippingTypeEnum.Note
                  select c as KindleClippings.Clipping).ToArray<Clipping>();

    return result;
}
```

Once the Azure API App is deployed in Azure, it can easily be called from the Azure Logic App.

A great thing to notice here is that, because Azure Logic App is aware of the required parameters and remembers all the information from the previous steps (shown in a different color), configuring the call is very simple.
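To show what the KindleClippings library handles behind the scenes, here is a simplified, hypothetical parser for the My Clippings.txt format, followed by the same filter as ArrayKindleClippingsAfter (notes only, added after a given date). The entry layout and the date format are assumptions based on files written by recent Kindle firmware; other devices and languages produce slightly different metadata lines, which is one more reason to rely on a maintained library. ParseClippings and the tuple fields are illustrative names, not part of KindleClippings.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Simplified, hypothetical parser for illustration only: the API App relies on
// the KindleClippings library instead. It assumes the layout written by recent
// Kindle firmware: entries separated by "==========", each made of a title line,
// a metadata line ("- Your Note on Location 42 | Added on <date>"), a blank
// line and the clipping text.
static List<(string Title, bool IsNote, DateTime Added, string Text)> ParseClippings(string content)
{
    var result = new List<(string Title, bool IsNote, DateTime Added, string Text)>();

    foreach (var entry in content.Split("==========", StringSplitOptions.RemoveEmptyEntries))
    {
        // Drop blank lines, carriage returns and a possible byte-order mark.
        var lines = entry.Split('\n')
            .Select(l => l.Trim('\r', ' ', '\uFEFF'))
            .Where(l => l.Length > 0)
            .ToArray();
        if (lines.Length < 2) continue; // not a complete entry

        var meta = lines[1];
        var at = meta.IndexOf("Added on ", StringComparison.Ordinal);
        if (at < 0) continue; // unexpected metadata layout

        var added = DateTime.ParseExact(meta.Substring(at + "Added on ".Length),
            "dddd, MMMM d, yyyy h:mm:ss tt", CultureInfo.InvariantCulture);

        result.Add((lines[0], meta.Contains("Your Note"), added, string.Join(" ", lines.Skip(2))));
    }

    return result;
}

var sample =
    "Azure Logic Apps overview\r\n" +
    "- Your Note on Location 42 | Added on Sunday, March 6, 2016 10:15:00 PM\r\n\r\n" +
    "Nice intro [azure, logicapp]\r\n==========\r\n" +
    "Some book (Some Author)\r\n" +
    "- Your Highlight on Location 10-12 | Added on Monday, February 1, 2016 9:05:12 AM\r\n\r\n" +
    "A highlighted sentence.\r\n==========\r\n";

// Same filter as ArrayKindleClippingsAfter: notes only, added after a given date.
var notes = ParseClippings(sample)
    .Where(c => c.IsNote && c.Added >= new DateTime(2016, 3, 1))
    .ToList();

Console.WriteLine($"{notes.Count} note(s): {notes[0].Title}");
```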

Azure Logic App calling another Azure Logic App

Now that we have an array of notes, we can loop through them to execute other steps. When I wrote the App, the only way to execute multiple steps in a loop was to call another Azure Logic App. The child Logic App is triggered by an HTTP POST and returns an HTTP 200 code when it's done. A JSON schema is required to define the input parameters. A great tool to get that done easily is http://jsonschema.net.
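Behind the designer, the parent's call to the child is an HTTP POST whose JSON body matches the schema declared on the child's trigger. Here is a minimal C# sketch of such a request, assuming a payload with title, note and dateAdded properties; those property names, the BuildChildRequest name and the trigger URL are placeholders, not the ones used by the ReadingNotes Builder.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Hypothetical sketch: the parent's call boils down to an HTTP POST whose JSON
// body matches the schema declared on the child's HTTP trigger. The property
// names and the trigger URL below are placeholders, not the real ones.
static HttpRequestMessage BuildChildRequest(string triggerUrl, string title, string note, DateTime dateAdded)
{
    // Anonymous type serialized as {"title":...,"note":...,"dateAdded":...}
    var body = JsonSerializer.Serialize(new { title, note, dateAdded });

    return new HttpRequestMessage(HttpMethod.Post, triggerUrl)
    {
        Content = new StringContent(body, Encoding.UTF8, "application/json")
    };
}

var request = BuildChildRequest(
    "https://example.invalid/child-logic-app-trigger",
    "Azure Logic Apps overview",
    "Nice intro [azure, logicapp]",
    new DateTime(2016, 3, 6, 22, 15, 0));

// The request is not sent here; HttpClient.SendAsync(request) would actually trigger the child.
var payload = await request.Content.ReadAsStringAsync();
Console.WriteLine(payload);
```

A sample payload like this one is also a convenient input for a schema generator such as jsonschema.net when defining the child's trigger.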

Readability API App

Readability is an online bookmark service that I have been using for many years now. It offers many APIs to parse or search articles and bookmarks. I found a great .NET wrapper on GitHub called CSharp.Readability, written by Scott Smith. I could have called the Readability API directly from the Logic App. However, I needed a little more, so I decided to use Scott's version as the base for this API App.

From the clipping collection returned at the previous step, I only have the title and need to retrieve the URL. To do this, I added a recursive method called SearchArticle.

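```csharp
// Searches the Readability bookmarks for an article with the given title,
// widening the date window around PublishDate on each retry.
private BookmarkDetails SearchArticle(string Title, DateTime PublishDate, int Pass)
{
    // The window grows by two days on each side per pass.
    var retryFactor = 2 * Pass;
    var fromDate = PublishDate.AddDays(-1 * retryFactor);
    var toDate = PublishDate.AddDays(retryFactor);

    // Fetch the bookmarks within the date window, sorted by "-date_added".
    // .Result blocks until the asynchronous call completes.
    var bookmarks = RealAPI.BookmarkOperations.GetAllBookmarksAsync(1, 50, "-date_added", "", fromDate, toDate).Result;

    var match = (from b in bookmarks.Bookmarks
                 where b.Article.Title == Title
                 select b as BookmarkDetails).FirstOrDefault();

    if (match != null)
    {
        return match;
    }

    // Not found: retry with a wider window; the last attempt is pass 4.
    if (Pass <= 3)
    {
        return SearchArticle(Title, PublishDate, Pass + 1);
    }

    return null;
}
```

Azure Function: Extract tags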

While most of the work was done in the different API Apps, I also needed a few small tools. There were many possibilities, but I decided to take advantage of the new Azure Function App: functions just sit there waiting to be used! The ReadingNotes Builder uses three Azure Functions; let me share one of them: ExtractTags. An interesting aspect of functions is that you can configure them to be triggered by an event or to act as a webhook.

To create a Function as a webhook, you can use one of the templates when you create the new Function, or, from the code editor in the Azure Portal, click on the Integrate tab and configure it.

Once that's done, you are ready to write the code. Once again, this code is very simple. It starts by validating the input: I'm expecting a JSON object with a node called note. Then it extracts the tags from it and returns both parts.

```csharp
#r "Newtonsoft.Json"

using System;
using System.Net;
using Newtonsoft.Json;

// Webhook Function: splits a note such as "Great read [azure, logicapp]"
// into its text and the tags written between the last square brackets.
public static async Task<object> Run(HttpRequestMessage req, TraceWriter log)
{
    string jsonContent = await req.Content.ReadAsStringAsync();
    dynamic data = JsonConvert.DeserializeObject(jsonContent);

    // Reject requests without a "note" property (or with an empty body).
    if (data == null || data.note == null) {
        return req.CreateResponse(HttpStatusCode.BadRequest, new {
            error = "Please pass note properties in the input object"
        });
    }

    string note = $"{data.note}";
    string tags = "";
    string cleanNote = note;

    // Tags sit between the last '[' and the following ']'
    // (or the end of the note if the closing bracket is missing).
    int open = note.LastIndexOf('[');
    if (open >= 0) {
        int close = note.IndexOf(']', open);
        int end = close >= 0 ? close : note.Length;
        tags = note.Substring(open + 1, end - open - 1).Trim();
        cleanNote = note.Substring(0, open).TrimEnd();
    }

    return req.CreateResponse(HttpStatusCode.OK, new { tags, cleanNote });
}
```

Now, to call it from the Azure Logic App, you first need to click Show Azure Function in the same region to see your Function App. Then simply add the input; in this case, as expected, a JSON object with a node called note.
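ExtractTags returns the tags as a single string, while the process plan uses the first tag as the category. Here is a minimal sketch of that split, assuming the tags are comma-separated (the post doesn't say which separator is used); SplitTags is a hypothetical name.

```csharp
using System;
using System.Linq;

// Hypothetical sketch: turns the raw tag string returned by ExtractTags into a
// category and a tag list. The comma separator is an assumption; the post only
// says that the first tag is used as the category.
static (string Category, string[] Tags) SplitTags(string rawTags)
{
    var parts = rawTags
        .Split(',', StringSplitOptions.RemoveEmptyEntries)
        .Select(t => t.Trim())
        .Where(t => t.Length > 0)
        .ToArray();

    // The first tag doubles as the category; a note without tags gets none.
    var category = parts.Length > 0 ? parts[0] : "";
    return (category, parts);
}

var (cat, tagList) = SplitTags("azure, logicapp, serverless");
Console.WriteLine($"{cat}: {string.Join(" | ", tagList)}");
```

The first tag is kept in the list as well, so a note tagged [azure, logicapp] is filed under azure and still carries both tags.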